Reza Habibi

About Me

DigitalOcean deleted everything. This is my new website. I’m working on it … :)

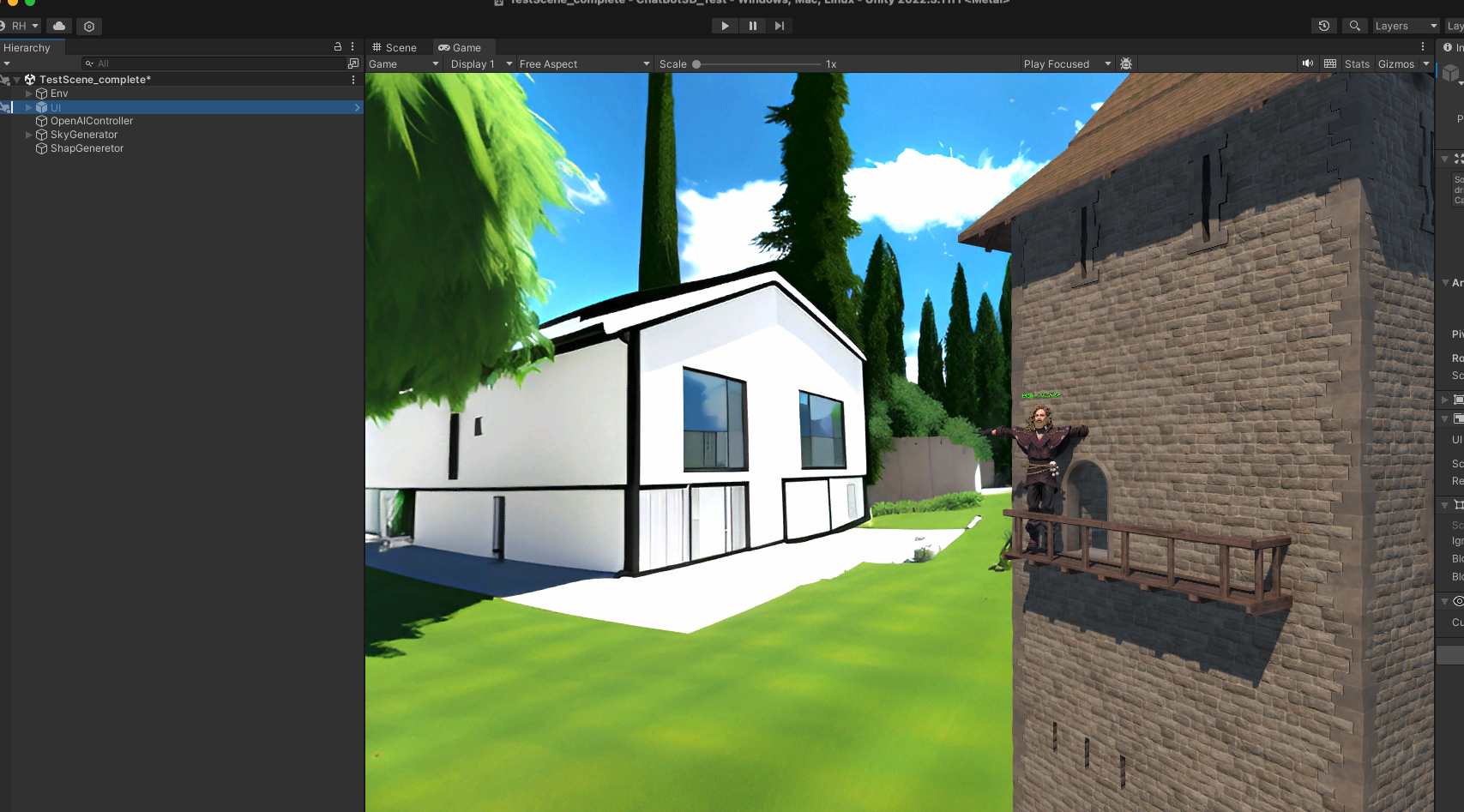

I’m working on the broader question of how to achieve mutual understanding between humans and machines (LLMs), specifically focusing on how we can create tailored interactions with machines and enhance machine understanding of these interactions through visual communication. Additionally, I’m exploring the topics of Human-AI alignment and AI education.

I hold Bachelor’s and Master’s degrees in Computer Science, emphasizing HCI, NLP, and immersive, interactive environments. My contributions to esteemed venues such as CHI, CHI Play, FDG, HCII, ACII, and AAAI are documented on my Google Scholar profile. Beyond my academic pursuits, I channel my creativity into crafting art pieces that seamlessly blend music, digital sculpture, and technology. Alongside these artistic endeavors, I have been involved in motorsports as both a driver and engineer for years.

News

- [Feb 2024] I’m happy to announce that I’ll present my poster on Learning and GenAI literacy.

- [Jan 2024] I’m happy to announce that our paper, “Backseat Gaming”: An Interview Study on Co-Regulated Learning within a Collegiate Male Esports Community, was accepted at CHI 2024.

- [Jan 2024] I am pleased to present my recent research on GenAI and learning at the UCSC Humanities Institute.

- [Aug 2023] I am pleased to announce that I have been appointed as a graduate student representative on the Committee on Academic Freedom (CAF).

- [July 2023] I am delighted to announce my collaborative art project with SF OPERA, which explores the innovative realms of AI, BCI, and immersive tech.

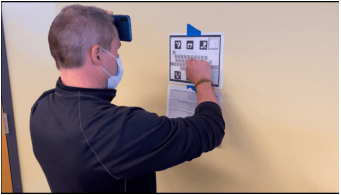

- [Feb 2023] Our paper about first-year student stress measurements using ARG was accepted to FDG 2023.

- [2023] Our paper about modeling student affective behaviors in an RPG game was accepted to ACII 2023 (MIT).

- [2023] Our paper about Empathetic AI for Empowering Resilience in Students Using Games was accepted to AIIDE 2023.

- [2022] Our paper about the Self-Regulated Learning Perspective on Computational Support was accepted to CHI PLAY 2023.

- [2022] Our paper about A Data-Driven Design of AI ARG for first-year students was accepted to HCII 2022 (Best Paper).

Posts

Projects

Publications

Check my Google Scholar